Assessing AI’s place in design: Can AI do what designers do?

In the Segal Design Institute’s new Designing with AI course, students explore how and when generative AI can be responsibly used to make design better.

During the first class of DSGN 395/495: Designing with AI, Segal Design Institute instructor Liz Gerber asked her students to place themselves on a spectrum from yes to no, answering the question: “Can AI do what designers do?”

Paige Smyth (EDI ’25) put herself in the “no” category alongside most of the students in the course, reasoning that AI was not capable of the creative and innately human work done by designers. Having little experience with generative AI in an academic setting, Smyth held reservations about its place in the design process.

At the end of the quarter, Gerber asked students to re-evaluate AI’s place in design. This time, students were more evenly spread between yes and no.

“It’s not that AI takes away our creativity in the human aspects. It actually gives us more time to do that because we can pass off busy work to AI,” said Smyth, who graduated in the winter and is now working as a service designer for generative AI experience at KPMG, putting into practice the lessons she learned in the course.

Designing the course

The course, which ran for the first time in the fall for graduate students and in the winter for undergraduates, was intended to develop students’ appreciation for and understanding of AI’s place in the design process.

Gerber, who researches the roles of emerging technologies in collaborative problem solving as the co-director of the Center for Human Computer Interaction + Design and the Delta Lab at Northwestern, designed the course to bring cutting-edge technologies to the classroom. She asked students to work constructively with AI while identifying areas for improvement.

Students read articles and watched videos by thought leaders exploring the application of AI to problem solving in fields ranging from aerospace to entertainment. Gerber also brought Northwestern alumni and AI researchers to every class to discuss the practical applications of this emerging technology.

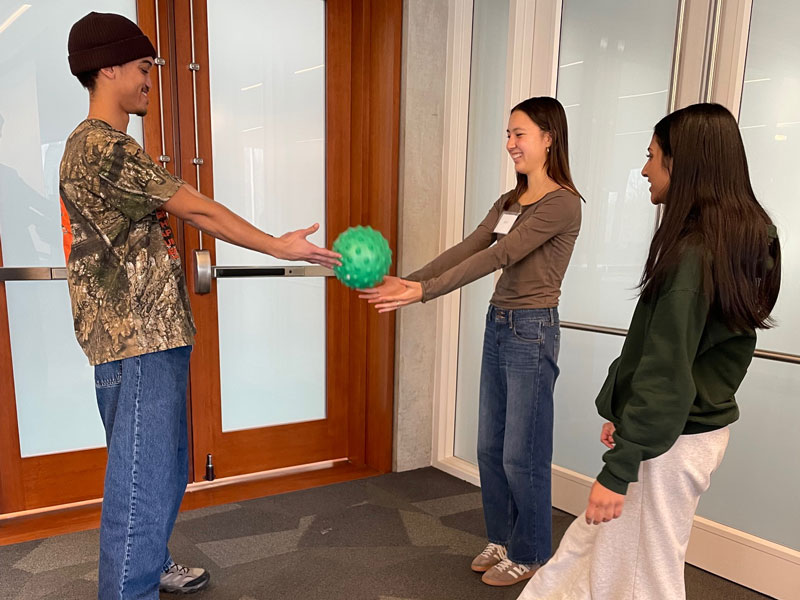

These experiences prepared students for collaborative in-class exercises, where teams conducted experiments using either AI or traditional methods to research, brainstorm, and prototype. After each activity, they reflected on AI’s role in different design phases, how their experiences met or challenged their expectations, and how they might use—or choose not to use—AI in future design work.

“It was really memorable. I thought that because our team was using physical modeling techniques we would have a much easier time because we could do anything we wanted, even if it didn't look perfect,” Smyth said. “In the end, it was actually much closer, because what physical modeling didn't have in fidelity, the AI prototypes did.”

Embracing the gray areas of AI

Alongside the opening gut check, Gerber asked students to categorize AI as either good or bad. At first, many fell firmly on one side or the other. By the end of the course, most had landed in the middle.

“Use of AI can increase productivity, automate repetitive tasks, enhance decision making, personalize systems, generate ideas, increase accessibility, and advance innovation, but it can also reinforce biases, displace jobs, threaten privacy, propagate misinformation, dehumanize, and have negative environmental impacts,” said Gerber, professor of mechanical engineering and (by courtesy) computer science at Northwestern Engineering and professor of communication studies in Northwestern’s School of Communication. “Students didn’t believe AI was either all good or bad because they now deeply understood its strengths and weaknesses.”

To get to the root of ethical concerns when using AI, students examined ethical frameworks and industry guidelines, roleplaying a company using AI in its product development process. Taking on roles in the company ranging from executives to ethicists to product designers, the class got to the core of questions surrounding training and testing for bias, responsibility when using AI in design, data usage and security, and accessibility.

“Just as recognizing the broader, social, technological, and environmental contexts is critical for design solutions that work within and influence larger systems, so is this recognition needed for using AI in design work,” Gerber said.

The future of AI in design

Smyth predicts the lessons she learned in DSGN 395/495 will help her tremendously in her role at KPMG, one of the “Big Four” professional services networks.

“When designing things for end users that use AI, trust matters the most. Being transparent about when AI is being used and how it's being used can make people more trustworthy of it,” Smyth said. “I’ll definitely be using a lot of those principles.”

Smyth’s takeaways and plans to put them into action are exactly what Gerber envisioned for her students.

“I hope the students feel empowered to create a future of work they want rather than the one handed to them,” said Gerber. “Given the rapid pace of technological change, it can be easy to be overwhelmed and do what others around them are doing. Northwestern students have the opportunity to be leaders through their thoughtful creative and critical use of generative AI in their work.”