Visualizations of Audio

Noah Liebman works with Collablab and Delta Lab to develop visualizations of audio by applying computational models of the human auditory system.

Contribution

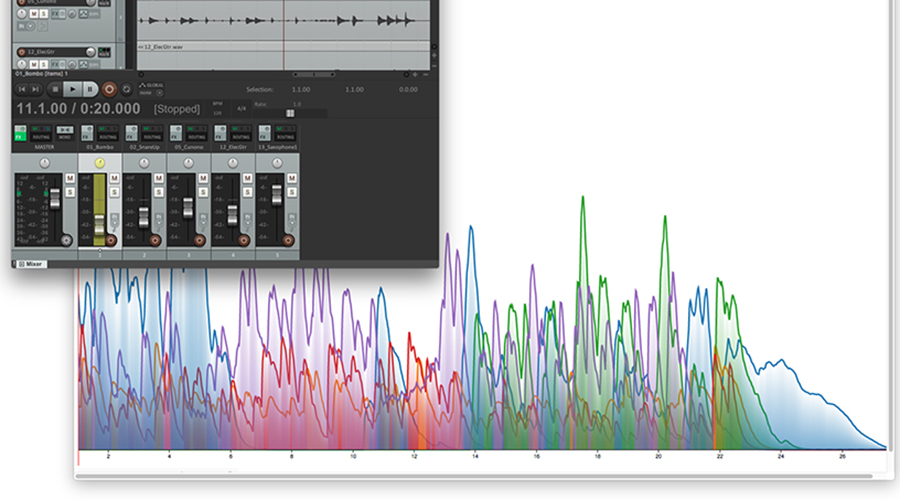

We are working with Collablab and Delta Lab at Northwestern to develop visualizations of audio that more closely represent what someone actually hears. We do this by applying computational models of the human auditory system, which can predict how people perceive sound. Our goal is to help audio engineers mix multitrack audio more efficiently, but the concept of visualizing models of perception extends beyond this application, and beyond sound.

Problem

Each of the millions of music recordings released every year has to be recorded, edited, mixed, and mastered. However, the designs of audio production tools are largely drawn from decades-old analog equipment. We are designing new tools for multitrack mixing that enable users to understand how their actions affect the overall sound of a mix.

Approach

We are using a user-centered design process. We started by conducting about 20 hours of interviews and observations with ten audio engineers. From these we learned how representations of sound (visual, auditory, and control-based) are used in the mixing process. We are now building functional prototypes of designs based on that user study and will validate them experimentally. With the experiment, we hope to show improvements in mixing speed and user satisfaction compared to existing tools.